Mad about Science: GPUs

By Brenden Bobby

Reader Columnist

Author’s note: The numbers referenced in this article are a reflection of the Nvidia 30 series graphics cards.

GPUs, or graphics processing units, have made headlines with the advent of cryptocurrency mining and AI datacenters. If you’re a PC gamer, you’re probably familiar with graphics cards and their absurd price tags — but why are they important? How do they work? Why do they cost so much? Math. That’s really it. Lots and lots of math.

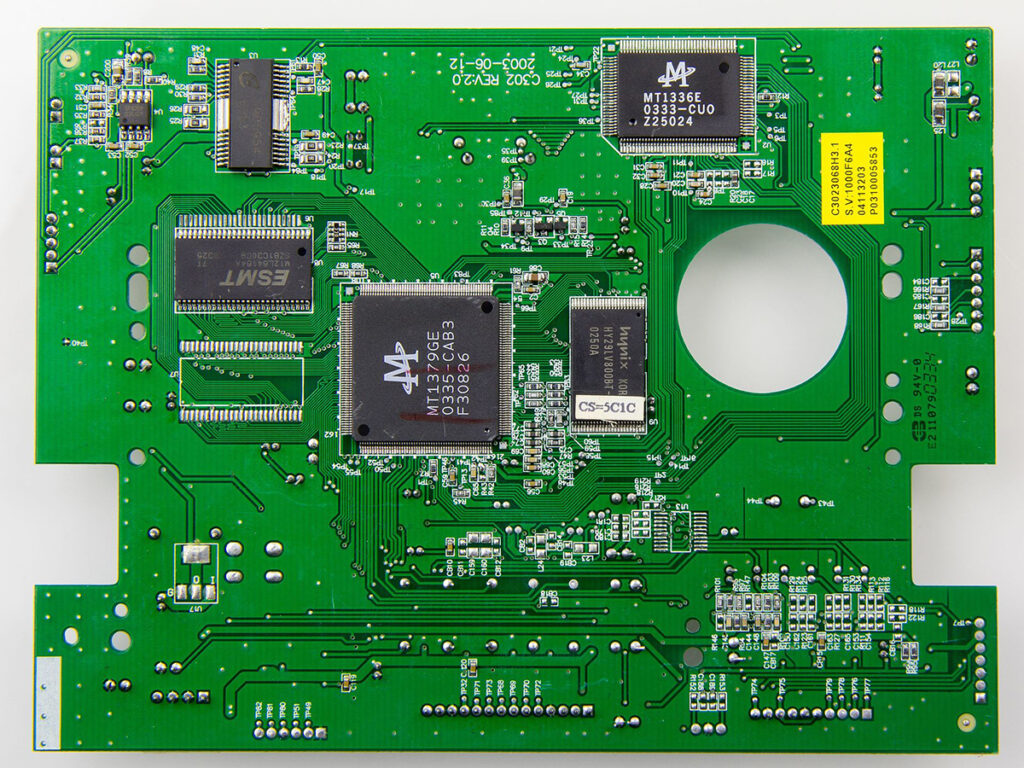

Let’s look into how a graphics card is made and explore its components. At the core of a graphics card is the PCB, or printed circuit board — this is what all of the other components sit on. At the center of the PCB is the GPU, which is effectively its “brain” and will perform the calculations needed to accomplish the task it’s been given.

Graphics cards were developed to perform the tens of trillions of calculations per second required to create graphical environments for video games, such as working out how light and geometry interact to create realistic effects in a simulated environment. That same ability to churn through enormous amounts of math is why they are so highly sought after in crypto mining and AI, but more on that later.

At a glance, the GPU just looks like a little golden square. Really, it’s a structure of more than 28 billion transistors packed into a tiny area. The GPU is organized hierarchically, much like a corporation such as Walmart. In this analogy, the GPU is Walmart as a whole, which is broken down into seven graphics processing clusters, or GPCs, which are like districts on a map. Each GPC is broken into 12 streaming multiprocessors, or SMs, which can be thought of as stores within a district. Within each SM there are four processing blocks, each run by its own warp scheduler, plus one ray tracing core. You can imagine the processing blocks as store managers and the ray tracing cores as specialized employees assigned to one very specific task. Each processing block contains 32 CUDA cores and one tensor core. You can imagine the CUDA cores as employees and the tensor cores as personal shoppers. Multiplying all of that out gives you 10,752 CUDA cores, 336 tensor cores and 84 ray tracing cores.
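To check the arithmetic, here is a short Python sketch that multiplies down the hierarchy. The constant and function names are made up for illustration; the counts are the 30-series figures from the paragraph above.

```python
# Chip hierarchy using the 30-series figures from the article.
# Names are illustrative, not official Nvidia terminology.
GPCS_PER_GPU = 7
SMS_PER_GPC = 12
BLOCKS_PER_SM = 4            # processing blocks (the "store managers")
CUDA_CORES_PER_BLOCK = 32
TENSOR_CORES_PER_BLOCK = 1
RT_CORES_PER_SM = 1


def core_totals():
    """Multiply down the hierarchy to get chip-wide core counts."""
    sms = GPCS_PER_GPU * SMS_PER_GPC
    blocks = sms * BLOCKS_PER_SM
    return {
        "SMs": sms,
        "CUDA cores": blocks * CUDA_CORES_PER_BLOCK,
        "tensor cores": blocks * TENSOR_CORES_PER_BLOCK,
        "ray tracing cores": sms * RT_CORES_PER_SM,
    }


if __name__ == "__main__":
    for name, count in core_totals().items():
        print(f"{name}: {count:,}")
```

Running it prints 84 SMs, 10,752 CUDA cores, 336 tensor cores and 84 ray tracing cores, matching the totals above.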

While the analogy makes sense of the structure of the chip, it doesn’t clarify what these cores actually do. CUDA cores are for basic arithmetic calculations, primarily addition and multiplication using binary numbers. These are the most plentiful and do the heavy lifting when it comes to building video game environments.

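Tensor cores are specialized matrix multiplication units. Matrix math is what describes geometric transformations, such as a wall appearing to change shape as you get close to it, and tensor cores are also invaluable for AI neural networks, which are built largely out of matrix multiplications.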
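The core operation a tensor core speeds up is a matrix multiply-accumulate, D = A × B + C, done on small blocks of numbers at once. Here is a plain-Python sketch of that arithmetic, assuming matrices stored as lists of rows; the function name is hypothetical, and the hardware does this in a single step rather than with loops.

```python
def matmul_accumulate(a, b, c):
    """Return D = A x B + C for small matrices stored as lists of rows.

    Tensor cores perform this multiply-accumulate on small matrix tiles
    in hardware; this loop version only shows the arithmetic involved.
    """
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("columns of A must match rows of B")
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) + c[i][j] for j in range(m)]
        for i in range(n)
    ]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[1, 1], [1, 1]]
print(matmul_accumulate(A, B, C))  # [[20, 23], [44, 51]]
```

Even this 2 × 2 case takes eight multiplications and eight additions; neural networks repeat it across millions of numbers, which is why dedicated hardware pays off.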

Ray tracing cores help simulate the behavior of light to create highly realistic interactions with objects in the game environment. The game generates light rays, and the ray tracing cores rapidly work out which surfaces each ray hits, so the card can calculate how that light bounces off or refracts through a variety of surfaces. Your brain does something like this organically, though, unlike the graphics card, it doesn’t have to generate the light itself.
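Here is a minimal Python sketch of the two pieces of math involved: testing whether a ray hits a surface, and bouncing it off. It assumes a single sphere and a unit-length ray direction, and the function names are hypothetical. Real games test rays against huge numbers of triangles, and speeding up that “does this ray hit anything?” search is the ray tracing core’s specialty.

```python
import math


def dot(u, v):
    """Dot product of two 3D vectors."""
    return sum(a * b for a, b in zip(u, v))


def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit, or None.

    Solves |origin + t*direction - center|^2 = radius^2 for t >= 0.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t >= 0:
            return t  # nearest intersection in front of the ray
    return None


def reflect(direction, normal):
    """Mirror a ray direction about a unit surface normal: d - 2(d.n)n."""
    k = 2 * dot(direction, normal)
    return [d - k * n for d, n in zip(direction, normal)]


# A ray shot straight ahead at a sphere 5 units away with radius 1.
print(hit_sphere([0, 0, 0], [0, 0, 1], [0, 0, 5], 1))  # 4.0
# The surface it hits faces back toward the viewer, so the ray bounces back.
print(reflect([0, 0, 1], [0, 0, -1]))  # [0, 0, -1]
```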

You may be wondering: if all of this is packed into such a small square, why are graphics cards so huge and bulky?

Using electrical current to perform 35 trillion calculations per second generates a lot of heat. Left unchecked, this heat could cause deformations and permanent damage to the chip, so engineers need to be able to cool down the chip appropriately. This is done in a very similar manner to how we cool anything else, such as your car, house and even, in some cases, your refrigerator.
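Where does a number like 35 trillion come from? A common back-of-the-envelope estimate multiplies the number of cores by the clock speed and by the operations each core can finish per tick, counting a combined multiply-add as two operations. The 1.7 GHz clock in this Python sketch is an assumption for illustration, not the spec of any particular card, so the result lands near the figure above rather than exactly on it.

```python
def peak_ops_per_second(cuda_cores, clock_hz, ops_per_core_per_cycle=2):
    """Rough peak throughput: cores x clock speed x operations per cycle.

    The default of 2 counts one multiply-add per core per cycle
    as two operations (one multiply, one add).
    """
    return cuda_cores * clock_hz * ops_per_core_per_cycle


# Assumed clock of 1.7 GHz; real clocks vary by card, cooling and workload.
peak = peak_ops_per_second(10_752, 1.7e9)
print(f"{peak / 1e12:.1f} trillion operations per second")  # 36.6
```

This is a theoretical ceiling; real workloads rarely keep every core busy on every tick, but every one of those operations still turns electricity into heat.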

Air cooling, the most common way to cool a GPU, uses fans to pull cool air across a heatsink and push hot air out another vent. While common, it is also easily compromised in dusty areas, where dust can clog up the fans and even become a fire hazard over time. Liquid cooling, more common in high-performance cards, circulates a coolant that absorbs heat from the GPU and carries it to a radiator, similar to the one in your car. A hybrid system uses both air circulation and liquid cooling, but can require more work to build and maintain.

You may be wondering why we still use central processing units (CPUs, or processors), which have fewer cores and less raw processing power than GPUs. Why have both when we could just have more GPUs?

GPUs are great at processing bulk amounts of “simple” data: the same calculation repeated over and over. Think of a GPU like a cargo train carrying a lot of containers. It’s efficient at what it does, but it’s expensive and not as adaptable as a CPU, which is more agile and can switch quickly between many different, more complex tasks. Your CPU is great for juggling lots of smaller computational tasks, like running browsers, Excel spreadsheets and other programs, while your GPU is great at crunching huge amounts of bulk data.
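A toy Python contrast of the cargo-train idea follows. The function names and the “double it and add one” rule are hypothetical stand-ins; what matters is the shape of the work. In the first function every result stands alone, so thousands of GPU cores could each take one. In the second, every step needs the previous answer, so extra cores can’t help and a fast, flexible CPU core is the better fit.

```python
def parallel_friendly(values):
    """Each output depends only on its own input, so the work can be
    split across many simple cores, GPU-style."""
    return [v * v for v in values]


def sequential_chain(start, steps):
    """Each step needs the previous result (double it, add one), so the
    steps must run in order no matter how many cores are available."""
    value = start
    history = []
    for _ in range(steps):
        value = value * 2 + 1
        history.append(value)
    return history


print(parallel_friendly([1, 2, 3, 4]))  # [1, 4, 9, 16]
print(sequential_chain(0, 4))           # [1, 3, 7, 15]
```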

This is why AI datacenters rely on banks of specially designed GPUs to process huge amounts of data and find optimized results for whatever task they’ve been given. If you thought your $1,000 graphics card was expensive, many of these datacenters will have an entire cabinet filled with dozens of $40,000 GPUs stacked on top of each other.

Stay curious, 7B.
